This is a report of a meeting of the Archives Portal Europe Country Managers in Slovakia on 30 November 2016, with some comments and views from the UK and Archives Hub perspective.

Context

The APE Foundation (APEF), which was created following the completion of the APEx project (an EC funded project to maintain and develop the portal running from 2012 to 2015), is now taking APE forward. It has a Governing Board and working groups for standards, technical issues and PR/comms. The APEF has a coordinator and three technical/systems staff as well as an outreach officer. Institutions are invited to become associate members, to help support the portal and its aims.

Things are going well for APEF, with a profit recorded for 2016 and growing associate membership. APEF continues to be busy with development of APE, and is encouraging cooperation and collaboration as a way to keep developing the portal and to take advantage of EU funding opportunities.

Current Development

The APEF has the support of the Ministry of Culture in the Netherlands and has a close working relationship with the Netherlands national aggregation project, the ‘DTR’, which is key to the current APE development phase. The idea is to use the framework of APE for the DTR, benefitting both parties. Cooperation with DTR involves three main areas:

• building an API to open up the functionality of APE to third parties (and to enable the DTR to harvest the APE data from The Netherlands)

• improving the uploading and processing of EAC-CPF

• enabling the uploading and processing of ‘additional finding aids’

The API has been developed so that specific requests can be sent to fetch selected data. It is possible to do this for EAD (descriptions) and EAC-CPF (names). The API provides raw data as well as processed results. There have been issues around things like the relevance ordering of results, which is a substantial area of work that is being addressed.

The API raises implications in terms of the data, as the Content Provider Agreement that APE institutions sign gives control of the data to the contributors. So, the API had to be implemented in a way that enables each contributor to give explicit permission for the data to be available as CC0 (fully open data). This means that if a third party uses the API to grab data, they only get data from a country that has given this permission. APEF has introduced an API key, which is a little controversial, as it could be argued that it is a barrier to complete openness, but it does enable the Foundation to monitor use, which is useful for impact, for checking correct use, and blocking those who misuse the API. This information is not made open, but it is stored for impact and security purposes.
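As a rough illustration of the permission model described above, here is a minimal Python sketch. It is not the APE implementation: the country codes, the API key, the record titles and the `fetch` function are all hypothetical, and it assumes permission is recorded per country and usage is simply counted per key.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical values for illustration only; not real APE data or keys.
CC0_COUNTRIES = {"NL", "SK"}   # countries assumed to have granted CC0 permission
VALID_KEYS = {"demo-key-1"}    # API keys assumed to have been issued
BLOCKED_KEYS = set()           # keys blocked after misuse
usage = Counter()              # per-key request count, kept for impact monitoring


@dataclass
class Record:
    country: str
    title: str


def fetch(api_key, records):
    """Return only records from countries that have opted in to CC0."""
    if api_key not in VALID_KEYS or api_key in BLOCKED_KEYS:
        raise PermissionError("unknown or blocked API key")
    usage[api_key] += 1
    return [r for r in records if r.country in CC0_COUNTRIES]


records = [
    Record("NL", "Municipal archive register"),
    Record("CH", "Cantonal court files"),
    Record("SK", "Town charter collection"),
]
open_records = fetch("demo-key-1", records)
print([r.title for r in open_records])
```

In this sketch the record from the country that has not given permission is silently left out of the response, and each call is counted against the key that made it.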

There was some discussion at the meeting around open data and use of CC0. In countries such as Switzerland it is not permitted to open up data through a CC0 licence, and in fact, it may be true to say that CC0 is not the appropriate licence for archival descriptions (the question of whether any copyright can exist in them is not clear) and a public domain licence is more appropriate. When working across European countries there are variations in approaches to open data. The situation is complicated because the application of CC0 for APE data is not explicit, so any licence that a country has attached to their data will effectively be exported with the data and you may get a kind of licence clash. But the feeling is that for practical purposes if the data is available through an API, developers will expect it to be fully open and use it with that in mind.

There has been work to look at ways to take EAC-CPF from a whole set of institutions more easily, which would be useful for the UK, where we have many EAC-CPF descriptions created by SNAC. Work to bring together more than one name description for the same person has not started, and is not scheduled for the current period of development, but the emphasis is likely to be on better connectivity between variations of a name rather than having one description per name.

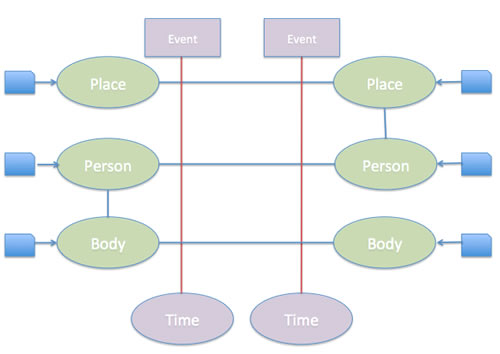

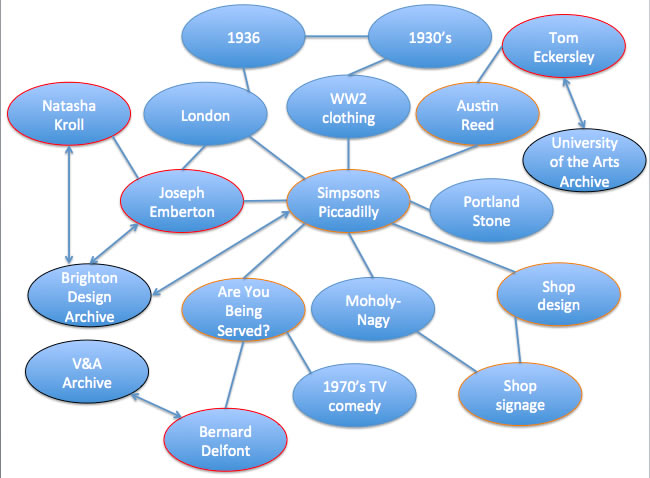

Additional finding aids offer the opportunity to add different types of information to APE. You may, for example, have a register of artists or ships’ logs, or you may have started out with a set of cards with names A-Z, relating to your archive in some way. You could describe these in one EAD description, and link this to the main description. In the current implementation of EAD2002 in APE this would have to go into a table in Scope & Content, and in-line tagging is not allowed to identify parts of the data. This limits how well you can search by name. EAD3, however, gives the option to add more information on events and names. You can divide a name up into parts, which allows for better searching. Therefore APE is developing a new means to fetch and process EAD3 for the additional finding aids alongside EAD2002 for ‘standard’ finding aids. In conjunction with this, the interface needs to be changed to present the new names within the search.
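To show why dividing a name into parts helps with searching, here is a small Python sketch that reads an EAD3-style `<persname>` made up of `<part>` elements. The sample name and the `localtype` values are invented for illustration, and this is not how APE itself processes the data.

```python
import xml.etree.ElementTree as ET

# Invented sample; the localtype values are an assumption, as they are chosen locally.
SAMPLE = """
<persname>
  <part localtype="surname">Novak</part>
  <part localtype="firstname">Anna</part>
  <part localtype="dates">1850-1912</part>
</persname>
"""


def name_parts(xml_text):
    """Map each part's localtype to its text so the parts can be indexed separately."""
    root = ET.fromstring(xml_text.strip())
    return {
        part.get("localtype", "unspecified"): (part.text or "").strip()
        for part in root.findall("part")
    }


parts = name_parts(SAMPLE)
print(parts["surname"])
```

With the parts separated like this, a search could match on surname alone, which is not possible when the whole name sits as plain text in a table cell.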

The work on additional finding aids may not be so relevant for the Archives Hub as a contributor to APE, as the Hub cannot look at taking on ‘other finding aids’, with all the potential variations that implies. However, institutions could potentially log into APE themselves and upload these different types of descriptions.

APE and Europeana

There was quite a bit to talk about concerning APE and Europeana. The APEF is a full partner of the Europeana Digital Services Infrastructure 2 (DSI2) project (currently running 2016/2017). The project involves work on the structure for Europeana, maintaining and running data and aggregation services, improving data quality, and optimising relations with data partners. The work APE is involved with includes improving the current workflow for harvest/ingest of data, and also evaluating what has already been ingested into Europeana.

Europeana seems to have ongoing problems dealing with multi-level EAD descriptions, compounded by the limitation that they only represent digital materials. The approach is not a good fit for archives. Europeana have also introduced both a new publishing framework and different rights statements.

The new publishing framework is a four-tier approach where you can think of Europeana as a more basic tool for promoting your archives, or as a platform for reuse. It refers to the digital materials in terms of whether they are a certain number of pixels, e.g. 800 pixels wide for thumbnails (adding thumbnails means using Europeana as a ‘showcase’) and 1,200 pixels wide (high quality and reusable, using Europeana as a distribution and reuse platform). The idea of trying to get ‘quality’ images seems good, but in practice I wonder if it simply raises the barrier too much.
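A contributor checking their images against the two widths mentioned above might do something like the following Python sketch. It assumes the figures are minimum widths and covers only these two thresholds, not the full framework; the function name and return labels are made up for illustration.

```python
# Widths taken from the report; treating them as minimums is an assumption.
SHOWCASE_MIN_WIDTH = 800
REUSE_MIN_WIDTH = 1200


def europeana_use(width_px):
    """Suggest how an image of the given pixel width could be used in Europeana."""
    if width_px >= REUSE_MIN_WIDTH:
        return "distribution and reuse"
    if width_px >= SHOWCASE_MIN_WIDTH:
        return "showcase"
    return "below both thresholds"


for width in (640, 800, 1600):
    print(width, europeana_use(width))
```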

The new rights statements require institutions to be very clear about the rights they want to apply to digital content. The likely conclusion of all this from the point of view of the Archives Hub is that we cannot grapple with adding to Europeana on behalf of all of our contributors, and therefore individual contributors will have to take this on board themselves. It will be possible for contributors to log into the APE dashboard (when it has been changed to reflect the new Europeana rights statements) and engage with this, selecting the finding aids and the preferred rights statements, and ensuring that thumbnail and reusable images meet the requirements. Once the descriptions are in APE they can then be supplied to Europeana. The resulting display in Europeana should be checked, to ensure that it is appropriate.

We discussed this approach, and concluded that maybe APE contributors could see Europeana as something that they might use to showcase their content; that is, to think of it on our terms, as archives, and how it might help us. There is no obligation to contribute, so it is a case of deciding whether it is worth representing the best visual archives through Europeana or whether this approach takes more effort than the value that we get out of it. After 10 years of working with Europeana, and not really getting proper representation of archives, the idea of finding a successful way of contributing archives is appealing, but it seems to me that the amount of effort required is going to be significant, and I’m not sure if the impact is enough to warrant it.

Europeana are working on a new way of automated and real time ingest from aggregators and content providers, but this may take another year or more to become fully operational.

Outreach and CM Reports

Towards the end of the day we had a presentation from the new PR/communications officer. Having someone to encourage, co-ordinate and develop ideas for dissemination should prove invaluable for APE. The Facebook page is full of APE activities and related news and events. You can tweet and use the hashtag #archivesportaleurope if you would like to make APE aware of anything.

We ended the day with reports from country managers, which, as always, threw up many issues, challenges, solutions, questions and answers. Plenty to set up APEF for another busy year!
