“Will you browse around my website?” said the spider to the fly,

“’Tis the most attractive website that you ever did spy.”

All of us want to provide attractive websites for our users. Of course, we’d like to think it’s not really the spider/fly kind of relationship! But we want to entice and draw people in, and often we will see our own website as our key web presence: a place for people to come to find out about who we are, what we have and what we do, and to look at our wares, so to speak.

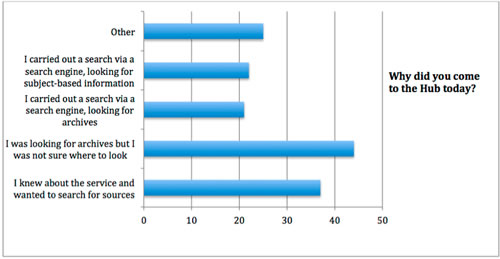

The recently released ‘Discovery’ vision is to provide UK researchers with “easy, flexible and ongoing access to content and services through a collaborative, aggregated and integrated resource discovery and delivery framework which is comprehensive, open and sustainable.” Does this have any implications for the institutional or small-scale website, usually designed to provide access to the archives (or descriptions of archives) held at one particular location?

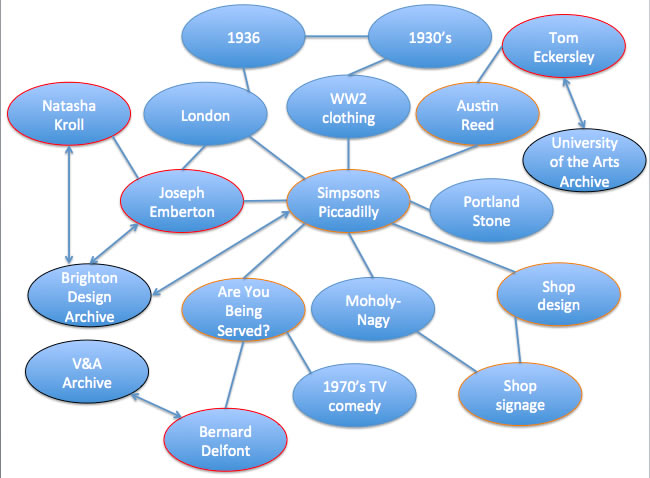

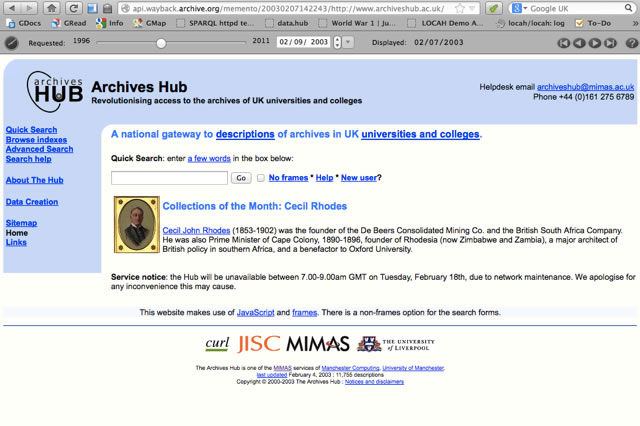

Over the years that I’ve been working in archives, announcements about new websites for searching the archives of a specific institution, or the outputs of a specific project, have been commonplace. A website is one of the obvious outputs from time-bound projects, where the aim is often to catalogue, digitise or exhibit certain groups of archives held in particular repositories. These websites are often great sources of in-depth information about archives. Institutional websites are particularly useful when a researcher really wants to gain a detailed understanding of what a particular repository holds.

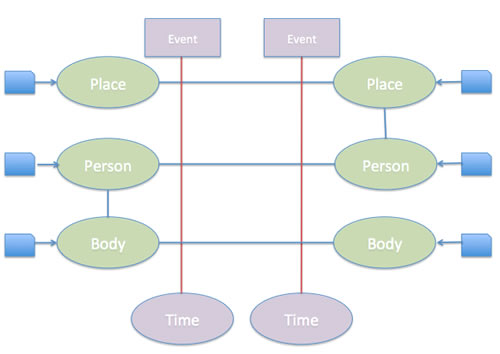

However, such sites can present a view that is based more around the provider of the information than the receiver. It could be argued that a researcher is less likely to want to use archives because they are held at a particular location (other than for reasons of convenience) and more likely to want archives on their subject area. The archives that are relevant to them are likely to be held across a whole range of archives, museums and libraries (and elsewhere). By only looking at the archives held at a particular location, even if that location is a specialist repository that represents the researcher’s key subject area, the researcher may not think about what they might be missing.

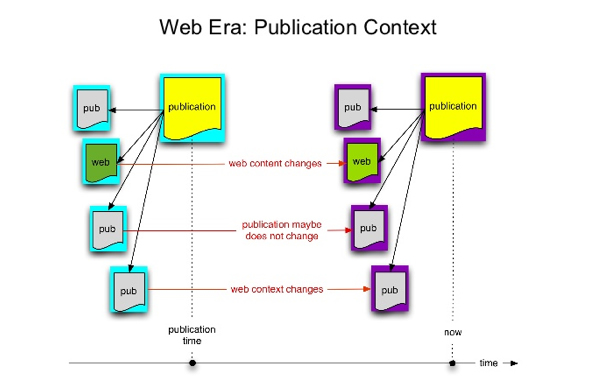

Project-based websites may group together archives in ways that benefit researchers more obviously, because they are often aggregating around a specific subject area, for example by making available the descriptions of, and links to, digital archives around a research topic. Value may be added through rich metadata, community engagement and functionality aimed at a particular audience. Sometimes the downside here is the sustainability angle: projects necessarily have a limited life-span, and archives do not. Archives are ever-changing and growing, and descriptions need to be updated all the time.

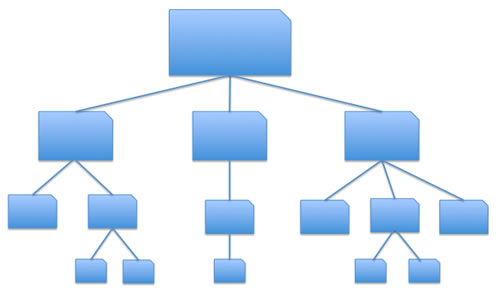

So, what is the answer? Is this too much of a silo-type approach, creating a large number of websites, each dedicated to a small selection of archives?

Broader aggregation seems like one obvious answer. It allows for descriptions of archives (or other resources) to be brought together so that researchers have the benefit of searching across collections, bringing together archives by subject, place, person or event, regardless of where they are held (although there is going to be some kind of limit here, even if it is at the national level).

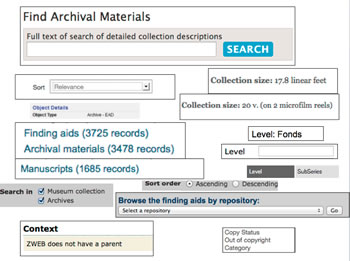

You might say that the Archives Hub is likely to be in favour of aggregation! But it’s definitely not all pros and no cons. Aggregations may offer a powerful search functionality for intellectually bringing together archives based on a researcher’s interests, but in some ways there is a greater risk around what is omitted. When searching a website that represents one repository, a researcher is more likely to understand that other archives may exist that are relevant to them. Aggregations tend to promote themselves as comprehensive – if not explicitly then implicitly – which creates an expectation that can never be fully met. They can also raise issues around measuring impact and around licensing. There is also the risk of a proliferation of aggregation services, further confusing the resource discovery landscape.

Is the ideal of broad inter-disciplinary cross-searching going to be impeded if we compete to create different aggregations? Yes, maybe it will be to some extent, but I think that it is an inevitability, and it is valid for different gateways to serve different audiences’ needs. It is important to acknowledge that researchers in different disciplines and at different levels have their own specific requirements, and we cannot fulfil all of these needs by only presenting data in one way.

One thing I think is critical here is for all archive repositories to think about the benefits of employing recognised and widely-used standards, so that they can effectively interoperate and so that the data remains relevant and sustainable over time. This is the key to ensuring that data is agile, and can meet different needs by being used in different systems and contexts.

I do wonder if maybe there is a point at which aggregations become unwieldy, politically complicated and technically challenging. That point seems to be when they start to search across countries. I am still unsure about whether Europeana can overcome this kind of problem, although I can see why many people are so keen on making it work. But at present it is extremely patchy and, for example, getting no results for texts held in Britain relating to Shakespeare is not really a good result. But then, maybe the point is that Europeana is there for those that want to use it, and it is doing ground-breaking work in its focus on European culture; the Archives Hub exists for those interested in UK archives and a more cross-disciplinary approach; Genesis exists for those interested in women’s studies; for those interested in the Co-operative movement, there is the National Co-operative Archive site; for those researching film, the British Film Institute website and archive is of enormous value.

So, is the important principle here that diversity is good because people are diverse and have diverse needs? Probably so. But at the same time, we need to remember that to achieve this kind of diverse landscape, we need to encourage data sharing and avoid duplication of effort. Once you have created descriptions of your archive collections you should be able to put them onto your own website, contribute them to a project website, and provide them to an aggregator.

Ideally, we would be looking at one single store of descriptions, because as soon as you contribute to different systems, if they also store the data, you have version control issues. The ability to remotely search different data sources would seem to be the right solution here. However, there are substantial challenges. The Archives Hub has been designed to work in a distributed way, so that institutions can host their own data. The distributed searching does present challenges, but it certainly works pretty well. The problem is that running a server, operating system and software can actually be a challenge for institutions that do not have the requisite IT skills dedicated to the archives department. Institutions that hold their own data have it in a great variety of formats. So, what we really need is the ability for the Archives Hub to seamlessly search CALM, AdLib, MODES, ICA AtoM, Access, Excel, Word, etc. and bring back meaningful results. Hmmm….
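To make the idea of remotely searching different data sources a little more concrete, here is a minimal sketch in Python. It is purely illustrative and is not how the Archives Hub actually works: the `Description` record, the `in_memory_source` adapter, `federated_search` and the repository names are all hypothetical, and a real adapter for a cataloguing system would be far more involved. The point is only that each source is mapped into one common record shape, and that one unavailable source should not bring down the whole search.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Description:
    """Common record shape every source is mapped into (hypothetical)."""
    repository: str
    reference: str
    title: str


# A "source" is any callable that takes a query and yields Descriptions.
Source = Callable[[str], Iterable[Description]]


def in_memory_source(repository: str, rows: list[dict]) -> Source:
    """Stand-in for a real adapter (one would be needed per system)."""
    def search(query: str) -> Iterable[Description]:
        q = query.lower()
        for row in rows:
            if q in row["title"].lower():
                yield Description(repository, row["ref"], row["title"])
    return search


def broken_source(query: str) -> Iterable[Description]:
    """Simulates an institution whose server is currently unreachable."""
    raise ConnectionError("remote server unavailable")


def federated_search(query: str, sources: dict[str, Source]):
    """Query every source; collect results and note sources that failed."""
    results: list[Description] = []
    failed: list[str] = []
    for name, search in sources.items():
        try:
            results.extend(search(query))
        except Exception:
            # One unreachable repository should not break the whole search.
            failed.append(name)
    return sorted(results, key=lambda d: (d.title, d.repository)), failed


# Invented sample data, for illustration only.
sources = {
    "Repository A": in_memory_source("Repository A", [
        {"ref": "A/1", "title": "Papers relating to Shakespeare productions"},
        {"ref": "A/2", "title": "Estate accounts"},
    ]),
    "Repository B": in_memory_source("Repository B", [
        {"ref": "B/7", "title": "Letters on Shakespeare"},
    ]),
    "Repository C": broken_source,
}

results, failed = federated_search("shakespeare", sources)
for d in results:
    print(f"{d.repository}: {d.reference} {d.title}")
print("Unavailable:", failed)
```

Even in this toy form, the hard part is visible: everything depends on someone writing and maintaining an adapter for each system and format, which is exactly where the real-world difficulty lies.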

The business case for opening up data seems clear. Projects like Open Bibliographic Data have helped progress the thinking in this arena and raised issues and solutions around barriers such as licensing. But it seems clear that we need to understand more about the benefits of aggregation, and the different approaches to aggregation, and we need to get more buy-in for this kind of approach. Does aggregation allow users to do things that they could not do otherwise? Does it save them time? Does it promote innovation? Does it skew the landscape? Does it create problems for institutions because of the difficulties with branding and measuring impact? Furthermore, how can we actually measure these kinds of potential benefits and issues?

Websites that offer access to archives (or descriptions of archives) based on where they are located and on the body that administers them have an important role to play. But it seems to me that it is vital that these archives are also represented on a more national, and even international, stage. We need to bring our collections to where the users are. We need to ensure that Google and other search engines find our descriptions. We need to put archives at the heart of research, alongside other resources.

I remember once talking about the Archives Hub to an archivist who ran a specialist repository. She said that she didn’t think it was worth contributing to the Hub because they already had their own catalogue. That is, researchers could find what they wanted via the institute’s own catalogue on their own system, available in their reading room. She didn’t seem to be aware that this could only happen if researchers already knew that the archive was there, and that this view rested on the idea that researchers would be happy to repeat that kind of search on a number of other systems. Archives are often about a whole wealth of different subjects – we all know how often there are unexpected and exciting finds. A specialist repository for any one discipline will have archives that reach way beyond that discipline into all sorts of fascinating areas.

It seems undeniable that data is going to become more open and that we should promote flexible access through a number of discovery routes, but this throws up challenges around version control, measuring impact, brand and identity. We always have to be cognisant of funding, and widely disseminated data does not always help us with a funding case because we lose control of the statistics around use and any kind of correlation between visits to our website and bums on seats. Maybe one of the challenges is therefore around persuading top-level managers and funders to look at this whole area with a new perspective?