For our Machine Learning experiments we are using Amazon Web Services (AWS). We thought it would be useful to explain what we have been doing.

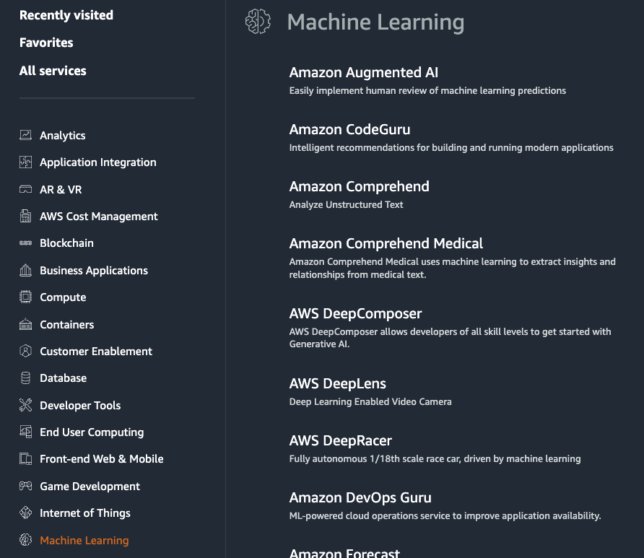

AWS, like most Cloud providers, gives you access to a huge range of infrastructure, services and tools. Typically, instead of having your own servers physically on your premises, you utilise the virtual servers provided in the Cloud. The Cloud can be a cost-effective solution, and in particular it allows for elasticity: dynamically allocating resources as required. It also provides a range of features, including a set of Machine Learning services and tools.

One of the services available is Amazon Rekognition, which we have used and written about in our previous blog posts. One of the things Rekognition does is object detection.

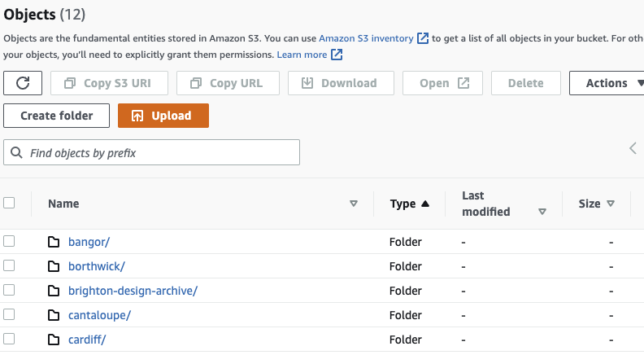

Our initial experiments were done by uploading a single image at a time and looking at the output. The next step is to work out how to submit a batch of images and get output from that. AWS doesn’t provide a ready-made interface for uploading a batch to Rekognition. We have batches of images stored in the Cloud (using the ‘S3’ service), and so we need to pass sets of images from S3 to the Rekognition service and store the resulting label predictions (outputs). We also need to figure out how to provide these predictions to our contributors in a user-friendly display.

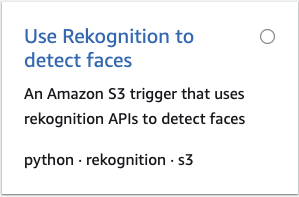

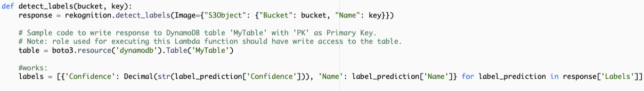

After substantial research into approaches that we could take, we decided to use the AWS Lambda and DynamoDB services along with Rekognition and S3. Lambda is a service that allows you to run code without having to set up the virtual machine infrastructure (it is often referred to as a serverless approach). We used some ‘blueprint’ Lambda code (written in Python) as the basis, and extended it for our purposes.

Using something like AWS does not mean that you get this type of facility out of the box. AWS provides the infrastructure, and the interfaces are reasonably user-friendly, but it does not provide a full-blown application for doing Machine Learning. We have to do some development work in order to use Rekognition, or other ML tools, for a set of images.

Lambda is set up so that the code runs every time an image is placed in the S3 bucket. The code sends the image to Rekognition and then passes the output (the label predictions) to another AWS service, called DynamoDB, which is a ‘NoSQL’ database.
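To give a flavour of what such a function looks like, here is a minimal sketch in Python. It is an illustration rather than our actual code: the table name `ImageLabels`, the item fields (`image`, `bucket`, `labels`) and the helper `label_image` are all assumptions made for the example. The Rekognition and DynamoDB calls (`detect_labels`, `put_item`) are the standard boto3 ones.

```python
import urllib.parse
from decimal import Decimal

TABLE_NAME = "ImageLabels"  # hypothetical DynamoDB table name


def label_image(bucket, key, rekognition, table, max_labels=10):
    """Ask Rekognition for labels on one S3 image and store them in DynamoDB."""
    response = rekognition.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MaxLabels=max_labels,
    )
    labels = [
        # The boto3 DynamoDB resource rejects Python floats, so store Decimals.
        {"Name": label["Name"], "Confidence": Decimal(str(label["Confidence"]))}
        for label in response["Labels"]
    ]
    # "image", "bucket" and "labels" are illustrative field names.
    item = {"image": key, "bucket": bucket, "labels": labels}
    table.put_item(Item=item)
    return item


def lambda_handler(event, context):
    """Entry point: runs once per S3 'object created' notification."""
    import boto3  # imported here so label_image can be tried out without AWS

    rekognition = boto3.client("rekognition")
    table = boto3.resource("dynamodb").Table(TABLE_NAME)
    results = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (a space in a file name becomes '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        results.append(label_image(bucket, key, rekognition, table))
    return {"processed": len(results)}
```

Because the function is triggered once per uploaded file, copying a whole folder of images into the bucket effectively gives you batch processing without writing a separate batch loop.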

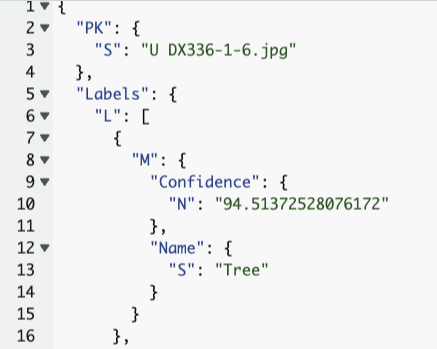

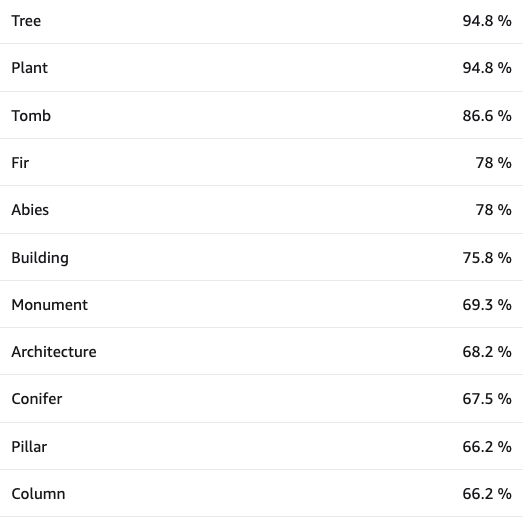

In the above image you can see an excerpt of the output from the Lambda code. This is for image U DX336-1-6.jpg (see below), for which it has predicted ‘tree’ with a confidence level of 94.51 percent. Ideally we also wanted to include the ‘bounding box’, which provides the co-ordinates for where the object is within the image.

We spent quite a bit of time trying to figure out how to add bounding boxes, and eventually realised that they are only added for some objects – Amazon Rekognition Image and Amazon Rekognition Video can return the bounding box for common object labels such as cars, furniture, apparel or pets, but the information isn’t returned for less common object labels. Quite how things are classed as more or less common is not clear. At the moment we are working on passing the bounding box information (when there is any) to our database output.
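As a rough sketch of how the optional bounding boxes might be handled: in the Rekognition label response, each label has an `Instances` list, which is empty when no location is available, and each instance carries a `BoundingBox` whose `Left`, `Top`, `Width` and `Height` are expressed as ratios of the image size. The helper names `extract_boxes` and `to_pixels` and the row layout below are our own assumptions for illustration, not part of AWS.

```python
def extract_boxes(response):
    """Flatten a detect_labels response into one row per label or instance."""
    rows = []
    for label in response.get("Labels", []):
        instances = label.get("Instances", [])
        if not instances:
            # The label applies to the image as a whole: no location given.
            rows.append(
                {"label": label["Name"], "confidence": label["Confidence"], "box": None}
            )
            continue
        for instance in instances:
            rows.append(
                {
                    "label": label["Name"],
                    "confidence": instance["Confidence"],
                    "box": instance["BoundingBox"],
                }
            )
    return rows


def to_pixels(box, image_width, image_height):
    """Convert a ratio-based box to pixel values (x, y, width, height)."""
    return (
        round(box["Left"] * image_width),
        round(box["Top"] * image_height),
        round(box["Width"] * image_width),
        round(box["Height"] * image_height),
    )
```

Keeping a row with `box` set to `None` means that labels without location information still reach the database, so nothing is lost for the less common labels.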

Clearly for this image, it would be useful to have ‘memorial’ and ‘cross’ as label predictions, but these terms are absent. However, sometimes ML can provide terms that might not be used by the cataloguer, such as ‘tree’ or ‘monument’.

So we now have the ability to submit a batch of images, but currently the output is in JSON (the above output table is only provided if you upload the image individually). We are hoping to read the data and place the labels into our IIIF development interface.
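Reading the JSON back could be as simple as the sketch below. The record shape is an assumption made for the example (a list of objects, each with an `image` name and a `labels` list of `Name` and `Confidence` pairs), as are the function name `labels_by_image` and the default confidence cut-off of 80; the real export may well be structured differently.

```python
import json


def labels_by_image(json_text, min_confidence=80.0):
    """Map each image name to its sorted label names at or above a threshold."""
    records = json.loads(json_text)
    summary = {}
    for record in records:
        names = [
            label["Name"]
            for label in record["labels"]
            # Confidence may be stored as a number or a numeric string.
            if float(label["Confidence"]) >= min_confidence
        ]
        summary[record["image"]] = sorted(names)
    return summary
```

A per-image dictionary like this is a convenient intermediate form, since a display layer can look up the labels for whichever image it is currently showing.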

The next step is to create a model using a subset of the images that our participants have provided. A key thing to understand is that in order to train a model so that it makes better predictions you need to provide labelled images. Therefore, if you want to try using ML, it is likely that part of the ML journey will require you to undertake a substantial amount of labelling if you don’t already have labelled images. Providing labelled content is the way that the algorithm learns. If we provided the above image and a batch of others like it and included a label of ‘memorial’ then that would make it more likely that other non-labelled images we input would be identified correctly. We could also include the more specific label ‘war memorial’ – but it would seem like a tall order for ML to distinguish war memorials from other types. Having said that, the fascinating thing is that often machines learn to detect patterns in a way that surpasses what humans can achieve. We can only give it a go and see what we get.

Thanks to Adrian Stevenson, one of the Hub Labs team, who took me through the technical processes outlined in this post.
