“In 2009, the British Library and JISC commissioned the three-year Researchers of Tomorrow study, focusing on the information-seeking and research behaviour of doctoral students in ‘Generation Y’, born between 1982 and 1994 and not ‘digital natives’. Over 17,000 doctoral students from more than 70 higher education institutions participated in the three annual surveys, which were complemented by a longitudinal student cohort study.” (Taken from http://www.jisc.ac.uk/publications/reports/2012/researchers-of-tomorrow#exec_sum).

This post picks up on some aspects of the study, particularly those that are relevant to archives and archivists. I am assuming that archivists fall, at least to an extent, into the category of ‘library professionals’, though our profession is not explicitly mentioned. I would recommend reading the report in full as it offers some useful insights into the research behaviour of an important group of researchers.

What is heartening about this study is that the findings confirm that Generation Y doctoral students are “sophisticated information-seekers and users of complex information sources”. The study does conclude that information-seeking behaviour is becoming less reliant on the support of libraries and library staff, which may have implications for the role of libraries and archive professionals, but “library staff assistance with finding/retrieving difficult-to-access resources” was seen as one of the most valuable research support resources available to students, although it was a relatively small proportion of students that used this service. There was a preference for this kind of on-demand 1-2-1 support rather than formal training sessions. One of the students said “the librarians are quite possibly the most enthusiastic and helpful people ever, and I certainly recommend finding a librarian who knows their stuff, because I have had tremendous amounts of help with my research so far, just simply by asking my librarian the right question.”

The survey concentrated on the most recent information-seeking activity of students, and found that most were not seeking primary sources, but rather secondary sources (largely journal articles and books).

“This apparent and striking dependence on published research resources implies that, as the basis for their own analytical and original research, relatively few doctoral students in social sciences and arts and humanities are using ‘primary’ materials such as newspapers, archival material and social data.”

This finding was true across all subject disciplines and all ages. The study found that about 80% of arts and humanities students were looking for any bibliographic references on their topic or specific publications, while only 7% were looking for non-published archival material. It seems that this reliance on published information is formed early on in their research, as students lay the groundwork for their PhD. Most students said they used academic libraries more in their first year of study – whether visiting or using the online services, so maybe this is the time to engage with students and encourage them to use more diverse sources in the longer-term.

A point that piqued my interest was that the arts and humanities students visiting other collections in order to use archival sources would do so even if “many of the resources they required had been digitised”, but this point was not explained further, which was frustrating. Is it because they are likely to use a mixture of digital and non-digital sources? Or maybe if they locate digital content it stimulates their interest to find out more about using primary sources?

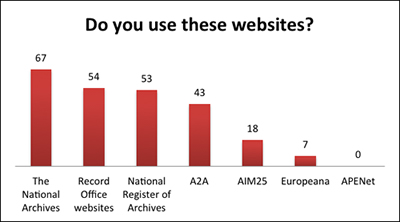

Around 30% used Google or Google Scholar as their main information gathering tool, although arts and humanities students sourced their information from a wider spread of online and offline sources, including library catalogues.

One thing that concerned me, and that I was not aware of, was the assertion that “current citation-based assessment and authenticity criteria in doctoral and academic research discourage the citing of non-published or original material”. I would be interested to know why this is the case, as surely it should be actively encouraged rather than discouraged? How does this fit with the need to demonstrate originality in research?

Students rely heavily on help from their supervisors early on in their research, and supervisors have a great influence on their information and resource use. I wonder if they actively encourage the use of primary sources as much as we would like? I can’t help thinking that a supervisor enthusiastically extolling the importance and potential impact of using archives would be the best way to encourage use.

There continues to be a feeling amongst students, according to this study, that “using social media and online forums in research lacks legitimacy” and that these tools are more appropriate within a social context. The use of Twitter, blogs and social bookmarking was low (2009 survey: 13% of arts and humanities students had used and valued Twitter) and use was more commonly passive than active. There was a feeling that new tools and applications would not transform the way that the students work, but should complement and enhance established research practices and behaviour. However, it should be noted that use of ‘Web 2.0’ tools increased over the three years of the study, so it may be that a similar study carried out in five years’ time would show significantly different behaviour.

Students want training in research geared towards their own subject area, preferably face-to-face. Online tutorials and packages were not well used. The implication is that training should be at a very local level and done in a fairly informal way. Generic research skills are less appealing. Research skills training days are valued, but if they are poor and badly taught, the student feels their time is wasted and may be put off trying again. Students were quite critical of the quality and utility of the training offered by their university, mainly because (i) it was not pitched at the right level, (ii) it was too generic, or (iii) it was not available on demand. Library-led training sessions got a more positive response, but students were far less likely to take up training opportunities after the first year of their PhD. Training in the use of primary sources was not specifically covered in the report, though it must be supposed this would be (should be!) included in library-led training.

The study indicated that students dislike reading (as opposed to scanning) on screen. This suggests that it is important to provide the right information online, in a form that is easy to scan through, and that it is also worth providing a PDF for printing out, especially for detailed descriptions.

One quote stood out for me, as it seems to sum up the potential danger of modern ways of working in terms of approaches to more in-depth analysis:

“The problem with the internet is that it’s so easy to drift between websites and to absorb information in short easy bites that at times you forget to turn off the computer, rest your eyes from screen glare and do some proper in-depth reading. The fragments and thoughts on the internet are compelling (addictive, even), and incredibly useful for breadth, but browsing (as its name suggests) isn’t really so good for depth, and at this level depth is what’s required.” (Arts and humanities)

We do often hear about the internet, or computers, tending to reduce levels of concentration. I think that this point is subtly different though – it’s more about the type of concentration required for in-depth work, something that could be seen as essential for effective primary source research.

Conclusions

We probably all agree that we can always do more to promote the importance of archives to all potential users, including doctoral students. Certainly, we need to make it easier for them to discover sources through the usual routes that they use, so for one thing ensuring we have a profile via Google and Google Scholar. Too many archives still resist this requirement, as if it is somehow demeaning or too populist, or maybe because they are too caught up in developing their own websites rather than thinking about search engine optimisation, or maybe it is just because archivists are not sure how to achieve good search engine rankings?

Are we actively promoting a low-barrier, welcoming and open approach? I recall many archive institutions that routinely state that their archives are ‘open to bona fide researchers only’. Language like that seems to me to be somewhat off-putting. Who are the ‘non-bona fide’ researchers that need to be kept out? This sort of language does not seem conducive to the principle of making archives available to all.

The applications we develop need to be relatively easy to absorb into existing research work practices, which will only change slowly over time. We should not get too caught up in social networks and Web 2.0 as if these are ‘where it’s at’ for this generation of students. Maybe the approaches to research are generally more traditional than we think.

The report itself concludes that the lack of use of primary sources is worrying and requires further investigation:

“There is a strong case for more in-depth research among doctoral students to determine whether the data signals a real shift away from doctoral research based on primary sources compared to, say, a decade ago. If this proves to be the case there may be significant implications for doctoral research quality related to what Park described as “widely articulated tensions between product (producing a thesis of adequate quality) and process (developing the researcher), and between timely completion and high quality research”.”