I was lucky enough to attend the 2012 EmTACL conference in Trondheim, and this blog is based around the excellent keynote presentation by Herbert van de Sompel, which really made me think about temporal issues with the Web and how this can limit our understanding of the scholarly record.

Herbert believes that the current infrastructure for scholarly communication is not up to the job. We now have many non-traditional assets, which do not always have fixity and often have a wide range of dependencies; assets such as datasets, blogs, software, videos and slides, which may form part of a scholarly resource. Everything is much more dynamic than it used to be. ‘Research objects’ often include assets that are interdependent, so they need to be available all together for the object to be complete. But this is complicated by the fact that many of them are ‘in motion’ and updated over time.

This idea of dynamic resources that are in flux, constantly being updated, is very relevant for archivists, partly because we need to understand how archives are not static and fixed in time, and partly because we need to be aware of the challenges of archiving ever more complex and interconnected resources. It is useful to understand the research environment and the way technology influences outputs and influences what is possible for future research.

There are examples of innovative services that are responding to the opportunities of dynamic resources. One that Herbert mentioned was PLOS, which publishes open scholarly articles. It puts publications into Wikipedia as well as keeping the ‘static’ copy, so that the articles have a kind of second life where they continue to evolve as well as being kept as they were at the time of submission. For example, ‘Circular Permutation in Proteins’.

The idea of executable papers is starting to become established – papers that are not just to read but to interact with. These contain access to the primary data with capabilities to re-execute algorithms and even capabilities to allow researchers to upload and use their own data. This produces complex interdependencies and a challenge for archiving: if something is not fixed in time, what does that mean for retaining access to it over time?

This all raises the issue of what the scholarly record actually is. Where does it start? Where does it end? We are no longer talking about a bunch of static files but a dynamic interconnected resource. In fact, there is an increasing sense that the article itself is not necessarily the key output, but rather it is the advertising for the actual scholarship.

Herbert concluded from this that it becomes very important to be able to view different points in time in the evolution of the scholarly record, and this should be done in a way that works with the Web. The Web is the platform, the infrastructure for the scholarly record. Scholarly communication then becomes native to the Web. At the heart of this is the need to use HTTP URIs.

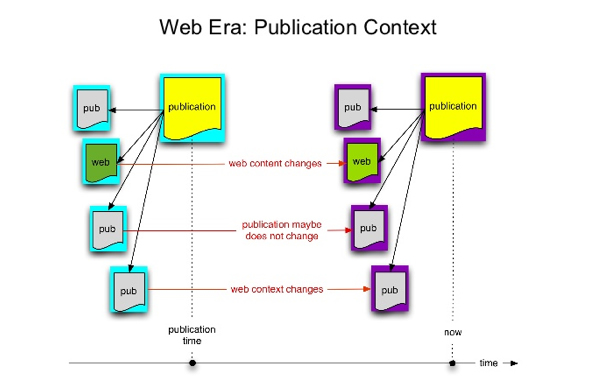

However, where are we at the moment? The current archival infrastructure for scholarly outputs deals with things with fixity and boundaries. It cannot deal with things in flux and with inter-dependencies. The Web exists in ‘now’ time; it does not have a built-in notion of time. It assumes that you want the current version of something – you cannot use a URI to get to a prior version.

We don’t really object to this limitation, something evidenced by the fact that we generally accept links that take us to 404 pages, as if it is just an inevitable inconvenience. Maybe many people just don’t think that there is any real interest in or requirement for ‘obsolete’ resources, and what is current is what is important on the Web.

Of course, there is the Internet Archive and other similar initiatives in Web archiving, but they are not integrated into the Web. You have to go somewhere completely different in order to search for older copies of resources.

If the research paper remains the same, but resources that are an integral part of it change over time, then we need to change archiving to reflect this. We need to think about how to reference assets over time and how to recreate older versions. Otherwise, we access the current version, but we are not getting the context that was there at the time of creation; we are getting something different.

Can we recreate a version of a scholarly record? Can we go back to a certain point in time so we can see linked assets from a paper as they were at the time of publication? At the moment we are likely to get many 404s when we try to access links associated with a publication. Herbert showed one survey on the decay of URLs in Medline, which runs at about 10% per year, especially for links to things like related databases.
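
To get a feel for what a 10% annual decay rate means over the life of a paper, here is a quick back-of-the-envelope sketch. It assumes the loss is constant and compounds year on year, which is my simplification and not something the survey necessarily claims; the function name is just for illustration.

```python
def surviving_fraction(annual_decay: float, years: int) -> float:
    """Fraction of links still resolving after `years`.

    Assumes the same proportion of the remaining links is lost each year
    (a simplifying assumption, not a finding from the survey).
    """
    return (1.0 - annual_decay) ** years


if __name__ == "__main__":
    for years in (1, 5, 10):
        print(years, "years:", round(surviving_fraction(0.10, years), 3))
```

On that assumption, roughly 59% of links would still work after five years and about 35% after ten.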

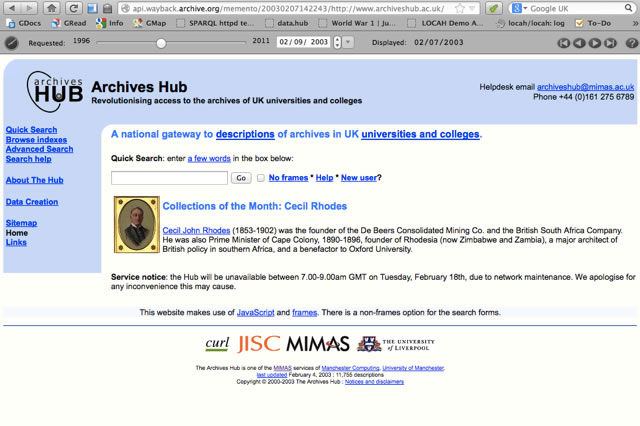

One solution to this is to be able to follow a URI in time – to be able to click on a URI and say ‘I want to see this as it was 2 years ago’. Herbert went on to talk about something he has created called Memento. Memento aims to better integrate the current and past Web. It allows you to select a day or time in the browser and effectively take the URI back in time. Currently, the team are looking at enabling people to browse past pages of Wikipedia. Memento has a fairly good success rate with going back to retrieve old versions, although it will not work for all resources. I tried it with the Archives Hub and found it easy to take the website back to how it looked in its very early days.
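
Behind the browser interface, Memento works over ordinary HTTP: the client asks a ‘TimeGate’ for a URI and adds an `Accept-Datetime` header saying which point in time it wants. The sketch below only builds such a request and does not send it. The TimeGate address and the example URI are my assumptions for illustration, so substitute whichever archive or aggregator you actually use.

```python
from datetime import datetime, timezone
from email.utils import format_datetime
from urllib.request import Request

# Assumption: a public Memento TimeGate endpoint; replace with your own.
TIMEGATE = "http://timetravel.mementoweb.org/timegate/"


def accept_datetime(when: datetime) -> str:
    """Format a timezone-aware datetime as an HTTP date in GMT."""
    return format_datetime(when.astimezone(timezone.utc), usegmt=True)


def memento_request(uri: str, when: datetime) -> Request:
    """Build (but do not send) a request for `uri` as it was at `when`."""
    return Request(TIMEGATE + uri, headers={"Accept-Datetime": accept_datetime(when)})


if __name__ == "__main__":
    # http://example.org/ is a placeholder URI.
    req = memento_request("http://example.org/", datetime(2010, 6, 17, tzinfo=timezone.utc))
    print(req.full_url)
    print(req.header_items())
```

Passing the request to `urllib.request.urlopen` would follow the TimeGate's redirect to the closest archived copy, where one exists.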

One issue is that the archived copies are not always created near the time of publication. But for those that are, they are created simply as part of the normal activity of the Web, by services like the Internet Archive or the British Library, so there is no extra work involved.

Herbert outlined some of the issues with using DOIs (digital object identifiers), which provide identifiers for resources that use a resolver to ensure that the identifier can remain the same over time. This is useful if, for example, a publisher is bought out – the identifier is still the same as the resolver redirects to the right location. However, a DOI resolver exists in the perpetual now. It is not possible to travel back in time using HTTP URIs. This is maybe one illustration of the way some of the processes that we have implemented over the Web do not really fulfil our current needs, as things change and resources become more complex and dynamic.

With Memento, the same HTTP URI can function as the reference to temporally evolving resources. The importance of this type of functionality is becoming more recognised. There is a new experimental URI scheme, DURI, or Dated URI. The idea is that a URI, such as http://www.ntnu.no, can be dated: 1997-06-17:http://www.ntnu.no (this is an example and is not actionable now). Herbert did raise another possibility of developing Websites that can deal with the TEL (telephone) protocol. The idea would be that the browser asks you whether the Website can use the TEL protocol, and if it can, you get this option offered to you. You can then use this and reference a resource and use Memento to go back in time.
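
To show how a dated reference of this kind could be taken apart by software, here is a small sketch that splits the example above into its date and its URI. It handles only the notation as written in this post (an ISO date, a colon, then the URI), which may not match the syntax of the actual DURI proposal, and the function name is hypothetical.

```python
from datetime import date
from typing import Tuple


def split_dated_uri(dated: str) -> Tuple[date, str]:
    """Split 'YYYY-MM-DD:<uri>' into a date and the plain URI.

    Illustrative only: follows the notation used in this post,
    not necessarily the experimental DURI specification.
    """
    stamp, sep, uri = dated.partition(":")  # split at the first colon only
    if not sep or not uri:
        raise ValueError("expected 'YYYY-MM-DD:<uri>'")
    return date.fromisoformat(stamp), uri


if __name__ == "__main__":
    print(split_dated_uri("1997-06-17:http://www.ntnu.no"))
```

The date that comes out could then be used for a Memento-style lookup of the URI.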

Herbert concluded that the idea of ‘archiving’ should not be just a one-off event, but needs to happen continually. In fact, it could happen whenever there is an interaction. Also, when new materials are taken into a repository, you could scan for links and put them into an archive, so the links don’t die. If you archive the links at the time of publication or when materials are submitted to a repository, then you protect against losing the context of the resource.
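
As a rough idea of what ‘scanning for links’ at deposit time might involve, the sketch below pulls the web links out of an HTML document so that they could be handed to an archive. It uses only Python's standard library; the class and function names are mine, and the step of actually submitting each link to an archive is deliberately left out.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect absolute http(s) links from <a href="..."> tags."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                absolute = urljoin(self.base_url, value)  # resolve relative links
                is_web = absolute.startswith(("http://", "https://"))
                if is_web and absolute not in self.links:
                    self.links.append(absolute)


def links_to_archive(html: str, base_url: str) -> List[str]:
    """Return the distinct web links found in `html`, in document order."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links
```

A repository could run something like this over each new deposit and pass the resulting list to whatever web archiving service it uses.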

Herbert introduced us to SiteStory, which offers transactional archiving of a web server. Usually a web archive sends out a robot, gathers and dumps the data. With SiteStory the web server takes an active part. Every time a user requests a page it is also pushed back into the archive, so you get a fine-grained history of the resource. Something like this could be done by publishers/service providers, with the idea that they hold onto the hits, the impact, the audience. It certainly does seem to be a growing area of interest.
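
The general pattern of transactional archiving can be sketched in a few lines. This is not SiteStory's own implementation, just a minimal illustration of the idea using a Python WSGI wrapper: each time the wrapped application serves a page, a timestamped copy of the response is appended to a store. The class name and the in-memory list standing in for the archive are assumptions for the example.

```python
from datetime import datetime, timezone


class TransactionalArchive:
    """WSGI wrapper that records every response it serves.

    Illustrative only: `store` is a plain list standing in for a real archive.
    """

    def __init__(self, app, store):
        self.app = app
        self.store = store

    def __call__(self, environ, start_response):
        captured = {}

        def recording_start_response(status, headers, exc_info=None):
            captured["status"] = status  # remember the status for the record
            return start_response(status, headers, exc_info)

        # Read the whole response so it can be both archived and returned.
        body = b"".join(self.app(environ, recording_start_response))
        self.store.append({
            "path": environ.get("PATH_INFO", ""),
            "served_at": datetime.now(timezone.utc),
            "status": captured.get("status"),
            "body": body,
        })
        return [body]
```

Wrapping an existing application as `TransactionalArchive(app, store)` leaves what the user sees unchanged while building up a history of what was actually served.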

Herbert’s slides are available on Slideshare.